Please pardon my absence, dear readers. Or perhaps I should say, I hope you’re enjoying the silence. It’s been a busy month for two reasons.

First, I am in the last stages of finishing up my book. More on that in the near future.

Second, the hype and spin around artificial intelligence have been in overdrive. We’ve seen the release of GPT-4, unsettling media appearances by OpenAI CEO Sam Altman, glowing reviews from Bill Gates, an alarmed open letter from the Future of Life Institute calling for a six-month halt to high-level AI development, and a warning about our impending doom from Eliezer Yudkowsky at the Machine Intelligence Research Institute. In response, Italy has banned ChatGPT.

We have been all over these developments on the War Room. (Links posted below.)

Last week, an open letter from the Future of Life Institute was signed by over a thousand “AI experts,” including Elon Musk, Yuval Noah Harari, Stuart Russell, Max Tegmark, and Steve Wozniak, who “call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

There is next to zero chance of this moratorium happening, but it never hurts to try. On the whole, their message is halfway sane. The letter raises four important questions that the public should have been pondering for a long time now:

Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves:

Should we let machines flood our information channels with propaganda and untruth?

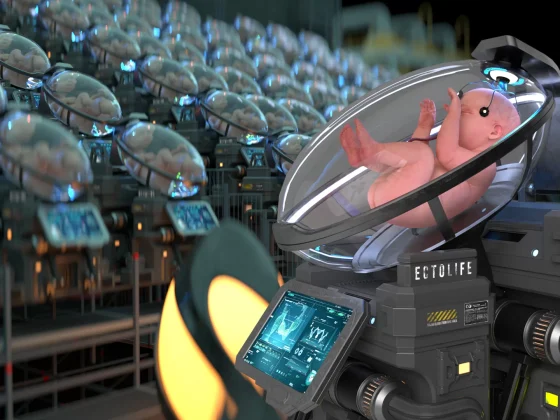

Should we automate away all the jobs, including the fulfilling ones?

Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us?

Should we risk loss of control of our civilization?

That would be a big NO on all four. What sort of lunatic would answer “yes”? Tech accelerationists, of course.

Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.

I am beyond skeptical that any advanced AI system can be released without significant downsides. You already see this in the mass psychosis created by the current crop of chatbots and art generators. On the world stage, the long-term geopolitical effects of advanced military AI will be studied for decades to come, if there’s anyone left to study them.

The inevitable downsides are obvious if the signatories’ predictions come to pass. Musk, Harari, Russell, Tegmark—all these men foresee a day when artificial general intelligence becomes smarter and therefore more powerful than human beings. That leaves our fate at the mercy of the Machine. These guys believe AI programmers are creating God in silico.

That’s a big NO from me on that one, too.

Some critics are ready to destroy everything to halt this ungodly locomotive. In a TIME magazine op-ed published the same day as the open letter, Yudkowsky argued that a six-month pause on AI training is pathetically inadequate. “The moratorium on new large training runs needs to be indefinite and worldwide,” he insists. “There can be no exceptions, including for governments or militaries.”

Despite being a transhumanist himself, Yudkowsky believes that superintelligent AI poses an existential risk to humanity, far worse than nuclear weapons. He demands that authorities “shut it all down.” If that means launching airstrikes on data centers operating on foreign soil—at the risk of nuclear war—then so be it:

Shut down all the large GPU clusters (the large computer farms where the most powerful AIs are refined). Shut down all the large training runs. Put a ceiling on how much computing power anyone is allowed to use in training an AI system, and move it downward over the coming years to compensate for more efficient training algorithms. No exceptions for governments and militaries. Make immediate multinational agreements to prevent the prohibited activities from moving elsewhere. Track all GPUs sold.

If intelligence says that a country outside the agreement is building a GPU cluster, be less scared of a shooting conflict between nations than of the moratorium being violated; be willing to destroy a rogue datacenter by airstrike.

Let’s hear it for Terran Yudkowsky, the Transhuman Luddite!

Read the rest here: