By Lily

Largely unnoticed by the public, the European Commission, with the support of European governments, is working to abolish the digital privacy of correspondence. Under the pretext of combating child abuse, a regulation is to be adopted that will oblige providers of communications services of all kinds to search the content of communications for depictions of child abuse or for attempts by adults to approach children (so-called “grooming”). The problem: this is a Trojan horse for undermining all encryption of digital communication, and with it all privacy.

WHAT IT MEANS

Client-side scanning compromises the confidentiality of correspondence that end-to-end encryption used to guarantee for communications services. Content is searched on the device, before it is encrypted, without any suspicion whatsoever. Apple intended to implement a similar procedure on its devices despite opposition from renowned IT security researchers, who concluded in a study that client-side scanning poses a threat to privacy, IT security, free speech, and democracy in general.
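The minimal Python sketch below makes the order of operations concrete. It rests on simplifying assumptions: the blocklist, the `Report` type and `send_with_client_side_scan` are hypothetical names. SHA-256 exact matching stands in for whatever matching a real system would use, and a toy XOR function stands in for real end-to-end encryption. The point it shows is that the scan runs on the plaintext, so the encryption that follows no longer protects content that matches.

```python
import hashlib
from dataclasses import dataclass

# HYPOTHETICAL: fingerprints of "known" content pushed to the device by an authority.
BLOCKLIST = {hashlib.sha256(b"known-flagged-content").hexdigest()}


@dataclass
class Report:
    """What leaves the device when the scanner fires: the plaintext itself."""
    fingerprint: str
    content: bytes


def fingerprint(content: bytes) -> str:
    # Exact cryptographic hash; real proposals use perceptual hashes or classifiers.
    return hashlib.sha256(content).hexdigest()


def toy_encrypt(content: bytes, key: bytes) -> bytes:
    # Placeholder XOR "cipher" standing in for real end-to-end encryption. NOT secure.
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(content))


def send_with_client_side_scan(content: bytes, key: bytes, reports: list) -> bytes:
    # 1. Scan the plaintext on the device, before any encryption happens.
    fp = fingerprint(content)
    if fp in BLOCKLIST:
        # 2. On a hit, the unencrypted content is queued for a report.
        reports.append(Report(fp, content))
    # 3. Only afterwards is the message encrypted and sent.
    return toy_encrypt(content, key)


reports: list = []
send_with_client_side_scan(b"hello", b"k3y", reports)
send_with_client_side_scan(b"known-flagged-content", b"k3y", reports)
print(len(reports))  # 1
```

However strong the real encryption in step 3 is, it cannot undo step 2: whatever the scanner flags has already been handed over in readable form.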

The current EU draft does not prescribe which technical measures providers must use. Instead, it leaves enforcement to a new authority, to be based at Europol, that will be able to issue orders to individual providers. In other words, a Europe-wide police monitoring center with broad powers to intervene in the confidentiality of correspondence will be established.

THE ISSUES WITH AI

At the same time, the AI systems that would carry out this scanning are, at the time of writing, highly error-prone. The underlying concept, “NeuralHash”, originated at Apple: it computes fingerprints of content and compares them with the fingerprints of known criminal content.
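The minimal Python sketch below shows what “fingerprint and compare” means in practice. It is not NeuralHash, which derives its fingerprint from a neural network. It swaps in the much simpler, classic “average hash” for an 8x8 grayscale image. The function names and the matching threshold of 5 bits are assumptions for illustration. What it shares with NeuralHash is the principle: similar-looking images should produce similar bit strings, and a hit is declared when the bit distance to a known fingerprint is small enough.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Average hash of an 8x8 grayscale image (values 0-255) as a 64-bit integer.

    Each bit records whether a pixel is brighter than the image's mean.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def matches(fingerprint: int, known: set[int], threshold: int = 5) -> bool:
    """HYPOTHETICAL matching rule: a hit if any known fingerprint is within `threshold` bits."""
    return any(hamming(fingerprint, k) <= threshold for k in known)


# Usage with synthetic images (no real content involved).
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]  # smooth gradient
brighter = [[p + 2 for p in row] for row in original]               # slight edit
inverted = [[255 - p for p in row] for row in original]             # heavy edit

database = {average_hash(original)}
print(matches(average_hash(brighter), database))  # True
print(matches(average_hash(inverted), database))  # False
```

A copy brightened uniformly by 2 levels yields the identical hash, so it still matches. An inverted copy differs in all 64 bits and does not. The threshold makes matching tolerant of small edits. It also lets deliberately crafted, unrelated images land close enough to count as a hit.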

In practice, the system has already been manipulated in both directions on several occasions. Illegal content was altered minimally so that the software considered it unproblematic. Conversely, completely legal content was altered so that the AI took it for criminal content. On top of that, according to Swiss federal police authorities, 86% of machine-generated reports are invalid. So the pictures from your beach vacation or of your grandson in the bath may end up on Europol’s desk because your phone thinks they are child pornography.

This opens the floodgates to a whole new range of abuse by security authorities. Authorities have misused collected data before: during the pandemic, for example, police unlawfully used guest data that restaurants had collected for contact tracing.

Civil rights organizations vehemently oppose the current draft because it is incompatible with European fundamental rights, could render encryption obsolete, and calls the anonymity of Internet use into question. Even child protection organizations, such as the German Child Protection Association, consider the proposed chat control measures excessive, probably because the proposal is not really meant to deal with child abuse.

Regardless of how chat control is implemented, it represents a serious violation of human rights. As with other proposed laws, the objective of restricting the dissemination of depictions of child abuse is merely a pretext. Instead, the objective is to construct a surveillance apparatus with expandable censorship options.

Expanding censorship tools once they exist is nothing new for the European Union: the upload filters introduced as part of the EU copyright reform were also planned for the TERREG regulation on terrorist content online, but failed due to public opposition.

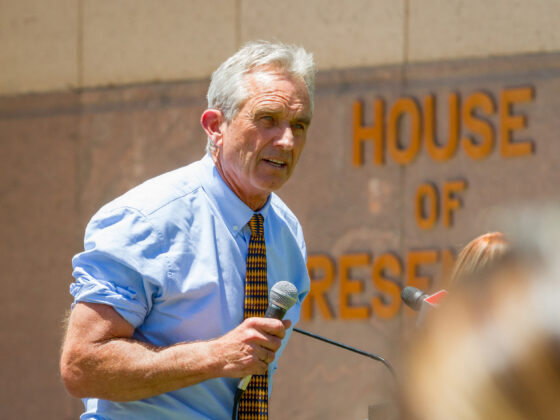

POLITICIZING ABUSE VICTIMS TO PROTECT THE EMPIRE FROM DISSIDENTS

Systems that search for depictions of child abuse are susceptible to misuse. They can also detect other content, as it makes no difference from a technical standpoint whether they are searching for images of child abuse or other undesirable visual material for political reasons.

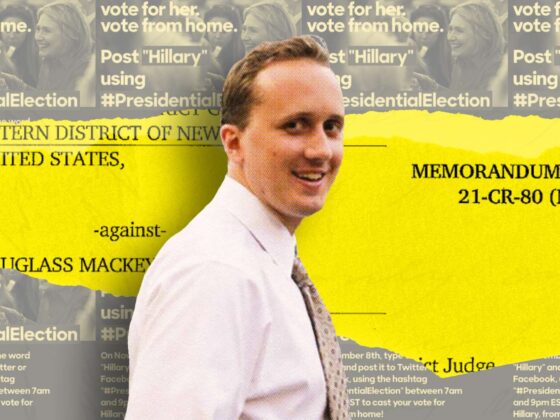

Instances in which content is incorrectly identified as a hit, known as “false positives,” also pose a threat to free speech. Content screening systems are imperfect and susceptible to manipulation. Apple’s “NeuralHash” system for recognizing photos, which calculates checksums from images that can then be matched, is one example. Existing software can alter an image so that it has the same checksum as an entirely different image.
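The same toy “average hash” also shows why a matching checksum proves little. The minimal Python sketch below is again a stand-in for NeuralHash, not Apple’s algorithm, and it uses made-up synthetic images. It builds two images that look nothing alike, a smooth gradient and two flat bands, yet produce exactly the same fingerprint. The software mentioned above reaches this result automatically for real images; the sketch only shows the principle.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Average hash of an 8x8 grayscale image: one bit per pixel, set if above the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


# Image A: a smooth gradient from dark (top left) to bright (bottom right).
gradient = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]

# Image B: two flat bands, dark on top and bright at the bottom.
bands = [[10] * 8 if r < 4 else [240] * 8 for r in range(8)]

print(gradient == bands)                              # False: different pixels
print(average_hash(gradient) == average_hash(bands))  # True: identical fingerprint
```

Any system that treats “same fingerprint” as “same image” would report both images identically, whichever one is actually on the blocklist.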

Where depictions of child abuse are concerned, this can mean that harmless, legal images are flagged as hits and reported. This is especially tragic because, in such cases, mere suspicion can be enough to destroy the reputation and lives of the accused. In addition, intelligence agencies with the necessary authority could plant images on targets’ computers, or manipulate harmless images so that sending them would trigger a report.

The obligation to monitor communications would also affect the many open-source and decentralized applications. Because these cannot easily be forced to build in scanning, the EU could instead ban them or block them at the network level. Distributors of such software could face severe penalties.

Furthermore, a system like this is basically useless against organized crime, because criminals simply adapt their behavior. To date, no reliable study has shown that comprehensive surveillance ultimately prevents crime.

HOW DOES IT AFFECT YOU?

- All of your chat conversations and emails will be searched automatically for suspicious content. Nothing remains private or confidential. No court order, not even an initial suspicion, is needed to search your messages. It happens automatically, all the time.

- If an algorithm classifies the content of a message as suspicious, your private or intimate photos may be viewed by staff and contractors of international corporations and police authorities. Your private nude photos may end up with strangers, in whose hands they are not safe.

- Because text recognition filters looking for “child grooming” frequently flag intimate chats, your flirting and sexting may be read by staff and contractors of international corporations and police authorities.

- You can be falsely reported and investigated for allegedly disseminating material containing child sexual exploitation. Messaging and chat control algorithms, for example, are known to flag perfectly legal vacation photos of children on a beach. According to Swiss federal police authorities, 86% of all machine-generated reports are invalid. In Germany, 40% of all criminal investigation procedures initiated for “child pornography” target minors.

- Expect major problems on your next international trip. Machine-generated reports on your communications may have been passed on to countries without comprehensive data protection laws, such as the United States, with unpleasant consequences.

- Intelligence services and malicious hackers may be able to read your private conversations and emails. If secure encryption is removed in order to screen messages, the door is open for anyone with the technical means to read them, not just government authorities.

- This is just the beginning. Once the technology for messaging and chat control has been established, it is simple to apply it to other applications. And who can guarantee that these incrimination machines will not be used on our smartphones and laptops in the future?

PARASITIC HYPOCRITES LEVERAGING CHILDREN’S SUFFERING

Amid enormous social inequality and food and energy prices that are rising as a result of the war in Ukraine, chat control is being positioned as a tool to suppress anticipated resistance and protests by undemocratic means.

After the introduction of this chat control, it is likely that the systems will be expanded to detect and automatically report depictions of police violence, demonstrations or unauthorized protests, anti-government ideas, and even satirical content.

In addition, the plans reveal the true nature of the EU: this organization, whose stated mission is the unification of the European continent under the banner of freedom and democracy, is developing the surveillance techniques of a dictatorship. The European Union is a communist confederation of states that assists its member states in imposing anti-democratic measures.

The problem with the systematic abolition of digital liberties runs deeper. Even if the systems are “only” used for comparatively “good” purposes today, they will remain in place indefinitely, and so will the data. People must understand that the NSA and other intelligence agencies have saved every byte of data they could get hold of. It is not the massive hall of federal agents listening in on your conversations in real time that The Simpsons portrayed. Your data is intercepted and either scanned immediately or stored until automated processes get around to scanning that batch. Every day of your life, you are sitting in a database, waiting to be looked at.

The Taliban’s acquisition of biometric data from the US military should make the problem clear to everyone: there is no such thing as secure data. If data is available, it will always be misused.