On February 4, 2023, Michal Kosinski posted a paper to arXiv, the preprint server hosted by Cornell University, titled “Theory of Mind May Have Spontaneously Emerged in Large Language Models.”

Anyone who has a 9-year-old knows what that means….

Theory of mind (ToM), or “the ability to impute unobservable mental states to others”, is central to human social interactions, communication, empathy, self-consciousness, and morality.

From Discover Magazine:

GPT-1 from 2018 was not able to solve any theory of mind tasks, GPT-3-davinci-002 (launched in January 2022) performed at the level of a 7-year-old child and GPT-3.5-davinci-003, launched just ten months later, performed at the level of a nine-year-old. “Our results show that recent language models achieve very high performance at classic false-belief tasks, widely used to test Theory of Mind in humans,” says Kosinski.

He points out that this is an entirely new phenomenon that seems to have emerged spontaneously in these AI machines. If so, he says this is a watershed moment. “The ability to impute the mental state of others would greatly improve AI’s ability to interact and communicate with humans (and each other), and enable it to develop other abilities that rely on Theory of Mind, such as empathy, moral judgment, or self-consciousness.”

But there is another potential explanation — that our language contains patterns that encode the theory of mind phenomenon. “It is possible that GPT-3.5 solved Theory of Mind tasks without engaging Theory of Mind, but by discovering and leveraging some unknown language patterns.”

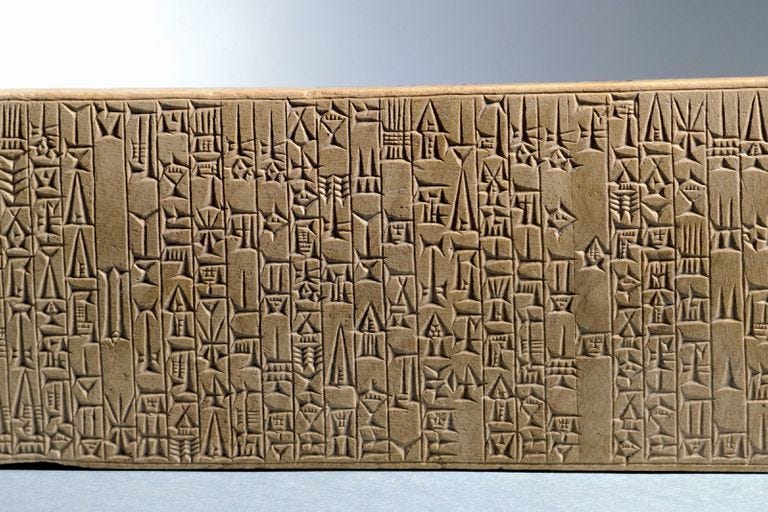

UNKNOWN LANGUAGE PATTERNS, you say?

As I point out in The Mystery of Language, we do not understand language. We do not know how it evolved—or even if it evolved. It suddenly seemed to just “happen”.

Now, AI appears to be evolving spontaneously, and at a faster and faster rate. But since we never understood the tools we gave it in the first place, we are only becoming more confused as it outpaces us.

And what’s really crazy is that some people find this wonderful and exciting, or even amusing, while others say it can never happen, even as they stare at their phones and feed the algorithms for hours upon hours every day.

If it is true that “unknown regularities exist in language that allow for solving Theory of Mind tasks without engaging Theory of Mind,” then it is also possible that:

“Our understanding of other people’s mental states is an illusion sustained by our patterns of speech.”

“Professor Noam Chomsky, one of the leading experts on linguistics, explains that language is a ‘core capacity’ for humans, but ‘where it comes from, how it works; nobody knows’. Scholars Morten H. Christiansen and Simon Kirby even go so far as to label the evolution of languages as: ‘The hardest problem in science’.”

Connect all of this to my essay Killer Robots, Video Games & Artificial Wombs, and we have a real problem on our hands.

We are giving the mysteries of language—that we do not even understand ourselves—over to artificial intelligence. We are then blessing AI with the ability to do three extremely dangerous things:

-

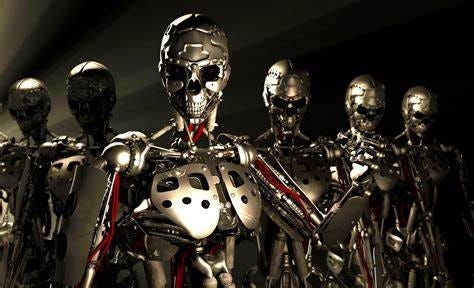

Kill us with Lethal Autonomous Weapons. LAWs, also known as slaughterbots, are described as the third revolution in warfare, after gunpowder and nuclear arms. They can select and engage targets without human intervention.

-

Reproduce themselves. “Xenobots are novel living machines. They’re neither a traditional robot nor a known species of animal. It’s a new class of artifact: a living, programmable organism.”

-

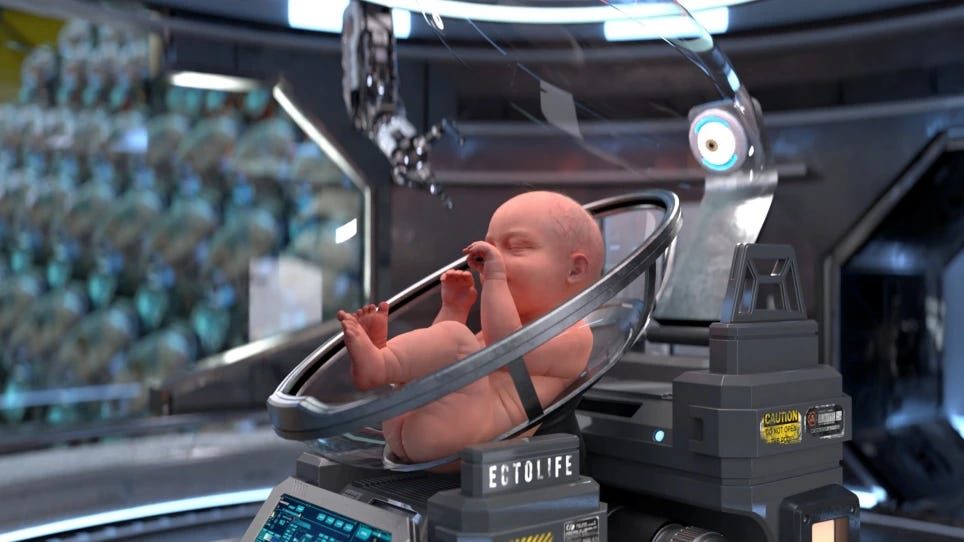

Raise our babies in artificial wombs. Yes, we are actually on the verge of growing human babies in pods, monitored by artificial intelligence. A single building can incubate up to 30,000 lab-grown babies per year.

With all of this happening at a terrifying rate, it might be too late to ask ourselves this important question:

At what point will we stop telling the algorithms what we think, and the algorithms start telling us what we think? And how will we even know the difference?

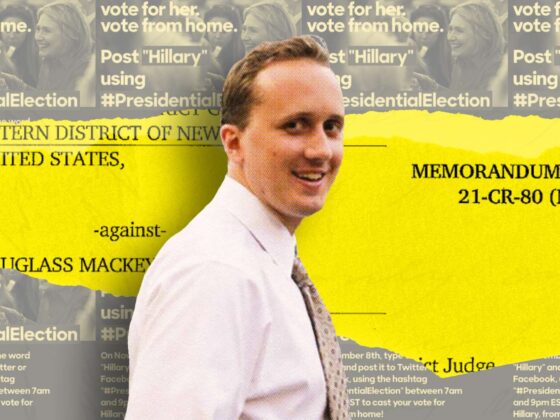

Today I was talking with a 10-year-old girl who told me she is well aware that AI listens to everything she says and one day will probably watch everything she does. When I asked what she thought about it, she said, “Well, I’m not doing anything wrong, so it doesn’t matter.”

We have put ourselves into a Panopticon and AI is watching over us.

“The computer is Bentham’s panopticon tower, and you are the subject from which information is being extracted. On the other end of the line, nothing is being communicated, no information divulged. Your online behavior and actions can always be seen but you never see the observer.”

It’s a one-way street. This isn’t Theory of Mind any longer. We are not exchanging ideas, learning from one another, gaining empathy. We are losing our minds to AI. We are stuck in virtual prisons, put there by algorithms that have determined this is where we should be. We are addicted to the screens that keep us passive, and we no longer even want to escape the panopticon.

Now, when I was a kid, I was told “God is watching you all the time, so you better behave.”

Is it the same as what is happening now?

Not at all. Whether you believe in God or not, it’s a fact that almost all humans, from all cultures, up until modern times, agreed on the concept of a deity or deities, an all-knowing and all-powerful force beyond and above us who created us.

AI, on the other hand, is our creation. And yet, clearly, without doubt, we are willingly giving it power over us, to imprison us and extract every bit of information it desires from us.

This is not progress. It is self-destruction.