“It’s hard to fathom how much human-level AI could benefit society, and it’s equally hard to imagine how much it could damage society if built or used incorrectly.” statement by OpenAI

We brought this on ourselves. We didn’t realize what we were doing. It was so convenient to have all these apps telling us what to do, all that information at our fingertips, never getting lost on a trip across town. So much fun to play all those video games.

For years, we passively gave up our data to men who built empires of unimaginable wealth and power out of our hopes and fears, our mental and physical health, and our basest desires to own the latest cool products.

I have a sense of urgency in putting this information out there, so that people can at least arm themselves with knowledge.

Which is why I’m now harping on ChatGPT.

ChatGPT recently went viral for its ability to do everything from writing code to writing essays. OpenAI, the company behind it, is now backed by Microsoft.

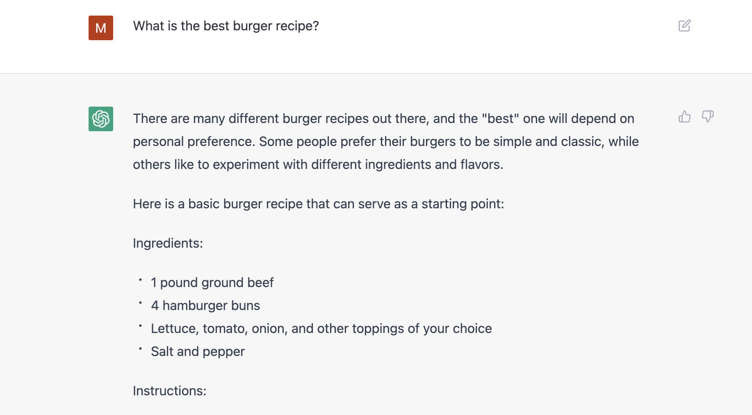

When you ask ChatGPT a question, it comes up with a nuanced answer. Like this:

The far-reaching implications of ChatGPT need to be understood. There is always a dark side to innovation and humans haven’t proved very good at circumventing it.

- 2015: Elon Musk and former Y Combinator president Sam Altman cofounded OpenAI. Along with other entrepreneurs like Peter Thiel and LinkedIn cofounder Reid Hoffman, they aimed to develop artificial intelligence “in the way that is most likely to benefit humanity as a whole.”
- In 2014, Stephen Hawking warned that artificial intelligence could end mankind. Musk himself has talked of the threats AI poses to humanity.
- In 2018, three years after helping found the company, Musk resigned from OpenAI’s board of directors.
- In 2019, the company built an AI tool that could craft fake news stories.
- At first, OpenAI said the bot was so good at writing fake news that they decided not to release it. Later that year, the company released a version of the AI tool as GPT-2.
- The company released another language model called GPT-3 in 2020.
- In 2020, Musk said on Twitter that his confidence in the company was “not high” when it came to safety.

Today, Musk said on Twitter that he paused OpenAI’s access to Twitter’s database for training its software.

As I say in my essay, X:

“Twitter is an accelerant to creating the ‘everything’ app.” — Elon Musk

Twitter completes what I call Musk’s Circle of 5:

Twitter + Dogecoin/crypto + Neuralink + Starlink + SpaceX = x

Musk might well be out of his league. Or he might not. His X app may never happen. There are a lot of big players in this game. He is just one of them. He has stolen the limelight and maybe there’s a reason why. That way, what goes on in the shadows can continue, unabated. I am doing what I can to bring light to those shadows.

Keep this most important point in mind, from the points I listed above:

In 2019, the company built an AI tool that could craft fake news stories.

“In February OpenAI catapulted itself into the public eye when it produced a language model so good at generating fake news that the organization decided not to release it. Some within the AI research community argued it was a smart precaution; others wrote it off as a publicity stunt. The lab itself, a small San Francisco-based for-profit that seeks to create artificial general intelligence, has firmly held that it is an important experiment in how to handle high-stakes research.”

I keep saying data is everything. Whoever has the most data rules the world. So, keep in mind that all these guys are in competition with one another. They will make deals and break them and make more deals and break those in their power struggles.

Finding creative ways to lure people into giving up their data was perfected with Covid testing. To better understand how this all intersects, read this from one of my most important essays, The Nefarious Goal Behind Covid Testing:

A CBS News article from January 2021 explains how, when Covid first broke out in Washington in March of 2020, BGI came to the rescue, proposing to build its labs and help run them. Out of desperation, such offers around the world were accepted. The quest to control our biodata – and, in turn, control health care’s future – is what it’s all about, yet governments shrug this off. Why?

Bill Evanina, who stepped down in 2021 from his position as the top counterintelligence official in the U.S., a veteran of both the FBI and CIA, was so concerned by BGI’s COVID testing proposals, and by who would ultimately get the data, that he authorized a rare public warning: “Foreign powers can collect, store and exploit biometric information from covid tests.”

Evanina describes these Covid tests as the “Trojan Horse” invading our shores.

Supervisory Special Agent Edward You is a former biochemist turned FBI investigator: They are building out a huge domestic database. And if they are now able to supplement that with data from all around the world, it’s all about who gets the largest, most diverse data set. And so, the ticking time bomb is that once they’re able to achieve true artificial intelligence, then they’re off to the races in what they can do with that data.

Jon Wertheim: You’re saying biggest data set wins?

Edward You: Correct.

Think of DNA as the ultimate treasure map, a kind of double-helixed chart containing the code for traits ranging from our eye color to our susceptibility to certain diseases. If you have 10,000 DNA samples, scientists could possibly isolate the genetic markers in the DNA associated with, say, breast cancer. But if you have 10 million samples, your statistical chances of finding the markers improve dramatically, which is why China wants to get so much of it.

Edward You: What happens if we realize that all of our future drugs, our future vaccines, future health care are all completely dependent upon a foreign source? If we don’t wake up, we’ll realize one day we’ve just become health care crack addicts and someone like China has become our pusher.

Bill Evanina: Personal data. Current estimates are that 80% of American adults have had all of their personally identifiable information stolen by the Communist Party of China.

The concern is that the Chinese regime is taking all that information about us – what we eat, how we live, when we exercise and sleep – and then combining it with our DNA data. With information about heredity and environment, suddenly they know more about us than we know about ourselves and, bypassing doctors, China can target us with treatments and medicine we don’t even know we need.

Edward You: Think about the dawn of– the Internet of Things and the 5G networks and the– and smart homes and smart cities. There are going to be sensors everywhere. It’s gonna be tracking your movement, your behavior, your habits. And ultimately, it’s gonna have a biological application, meaning that based on the data that gets collected, they’ll be able to analyze that and look at improving your health. That data becomes incredibly relevant and very, very valuable.

Jon Wertheim: You’re describing data almost as– as a commodity.

Edward You: Data is absolutely gonna be the new oil.

Yes, they keep the focus on China. But all of these companies and the billionaires behind them are in a race to see who can capture the most data.

The tech giants don’t care about the consequences. They conduct their experiments with AI, just like scientists conduct their experiments with dangerous pathogens: “for good.”

Back in 2015, “Lipsitch, professor of epidemiology and director of the Center for Communicable Disease Dynamics at Harvard Chan School, and co-author David A. Relman of Stanford University noted that the U.S. government suspended funding last October for so-called ‘gain-of-function’ research on avian flu. In such research, more contagious forms of the highly pathogenic flu would be created in the laboratory so that scientists could learn more about the genetics required for a flu strain to cause a pandemic.”

And yet, here we are today. As I write in my essay Techno Eugenics:

Back in 1975, a group of prominent scientists determined that ‘cloning or otherwise messing with dangerous pathogens should be off-limits.’ And yet, by 2015, the techniques scientists had theoretically discussed all those years ago had become reality with the gene-editing technique called CRISPR-Cas9.

What little the general public knows of CRISPR is confined to the good it can do for humanity. For example, it can potentially treat multiple illnesses, such as myeloma, sickle cell anemia, Huntington’s disease, and HIV, to name a few. I say “potentially” because so far, not much of that good has materialized.

On the more bizarre side, the gene-editing tool has been used to create malaria-blocking mosquitoes, muscular beagles, and miniature pet pigs.

On the morally troublesome side, we have the creation of designer babies, invasive mutants, species-specific bioweapons—possibilities that once existed only in the realm of science fiction and nightmares.

What are the rules? Conveniently, there still really aren’t any. The goalposts keep changing as the experiments rush onward. It’s the money and the power that always seem to overcome moral hesitancy.

And now, once again, we are being told the same old story. So much good can be done with this new AI. It will make our lives so much easier. It can free up more of our time to do…what?

Human beings are designed to make decisions, suffer defeats and enjoy triumphs, know the satisfaction of building things with their own hands and facing challenges. Each of us has our own life experiences and important lessons to learn on this incredible life journey.

What happens when all of that is taken away?

Yuval Noah Harari asks an important question:

“Humans think about life as a drama full of decision-making, what will be the meaning of human life if most decisions are taken by algorithms?”

In absorbing ChatGPT into itself, Microsoft gushed:

“The scope of commercial and creative potential that can be unlocked through the GPT-3 model is profound, with genuinely novel capabilities – most of which we haven’t even imagined yet.”

Yes, so noble.

But here’s their real goal.

The partnership allows Microsoft to compete with Google’s DeepMind AI company.

Think about it. Here we all are. Sitting ducks, being invaded by AI that is harvesting our data. These guys manipulate us like chess pieces, jousting furiously with one another for first place in the data-gathering game. Whatever any of them says about how wonderful it will all be for you and me, it’s just another lie.

With all of this happening, don’t forget the part where AI can come up with fake news. I will say it again:

In 2019, the company built an AI tool that could craft fake news stories.

Well, think about how much advantage they can take of this new product. Humans will need other products to tell them whether or not what they are reading or seeing is fake. This is crazy stuff, and I don’t want to be a part of it.

But let’s all obsess about the Twitter Files and “free speech” and how once upon a time bad actors labeled truth as fake and now Elon Musk, the “truth absolutist,” is promising that everyone will be able, at last, to be free to say whatever they want—within reason, but whose reason?

Don’t worry, it’ll be okay because whoever wins the data game will then give AI the task of telling you what’s fake and what’s true—the very AI that can come up with fakery so good, it can fool anyone.

Oh, the irony. Keep that free speech going. AI loves it.